Open Science

Basic principles and best practices

Dr. Domenico Giusti

Paläoanthropologie, Senckenberg Centre for Human Evolution and Palaeoenvironment

Course roadmap

4. Reproducible research and data analysis

Outline

- Definitions

- Rationale

- Summary

- FAQ

- Food for thought

- Practical exercises

Definitions

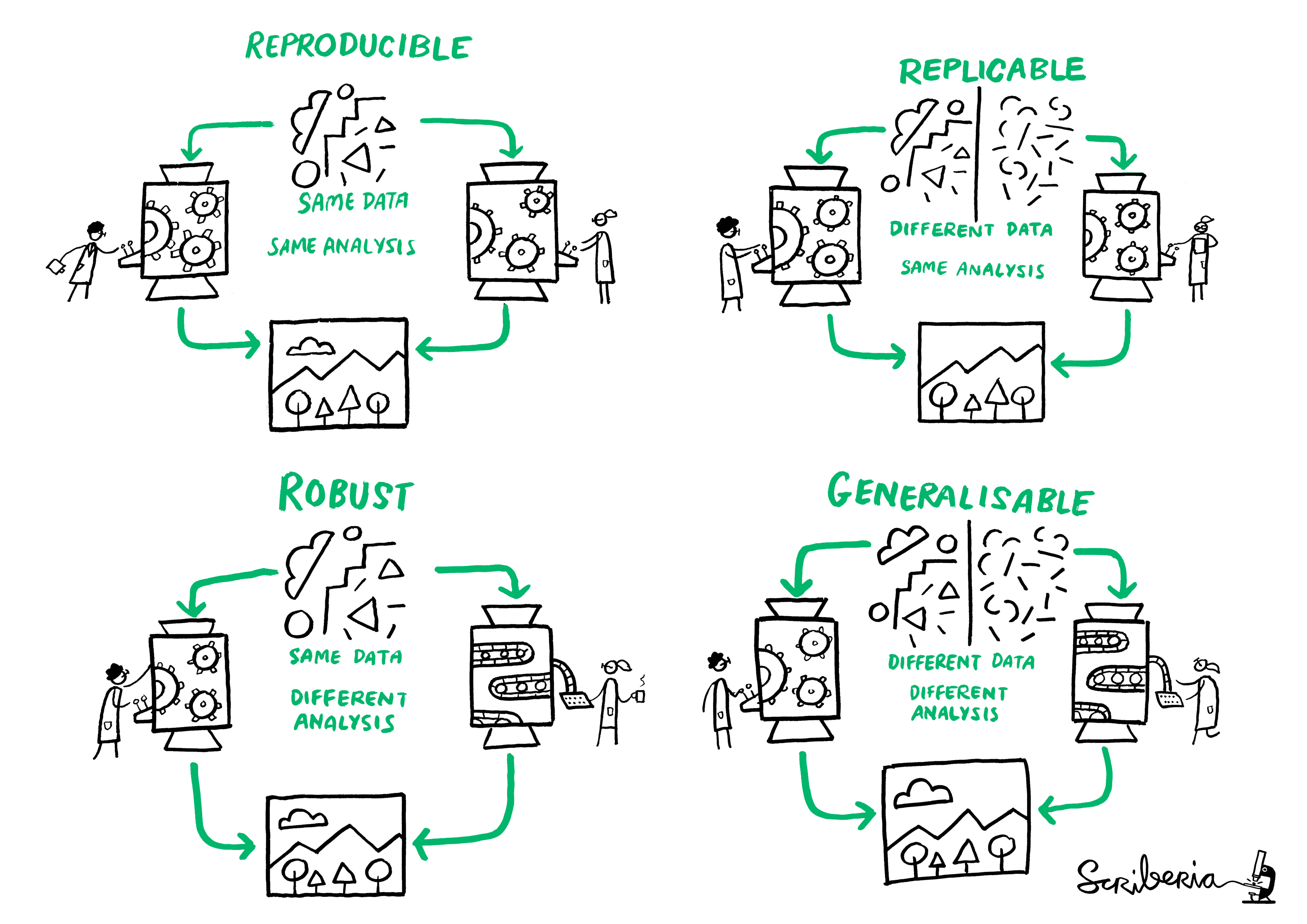

This image was created by Scriberia for The Turing Way community and is used under a CC-BY licence

Definitions

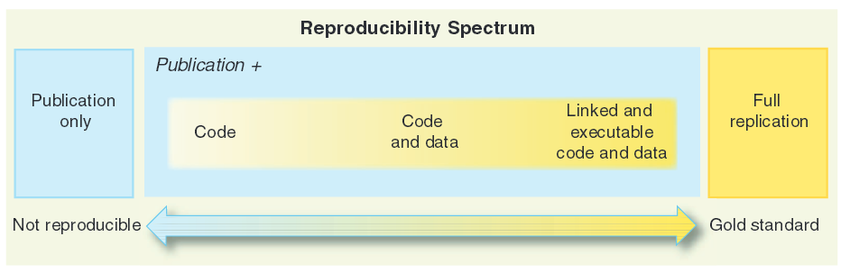

- Reproducible research - A result is reproducible when the same analysis steps performed on the same dataset consistently produce the same answer.

- Replicable research - A result is replicable when the same analysis performed on different datasets produces qualitatively similar answers.

- Robust research - A result is robust when the same dataset is subjected to different analysis workflows to answer the same research question and a qualitatively similar or identical answer is produced.

- Generalisable research - A result is generalisable when it is not dependent on a particular dataset nor a particular version of the analysis pipeline.
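In computational work, "the same analysis steps on the same dataset" only gives the same answer if every source of variation is pinned down, including random number generation. The following is a minimal Python sketch. It assumes a simple percentile bootstrap as the "analysis" and uses hypothetical measurement values; neither comes from the course material.

```python
import random
import statistics


def bootstrap_mean_ci(values, n_resamples=1000, seed=2021):
    """Percentile bootstrap 95% interval for the mean of `values`."""
    # A local generator with an explicit seed: no hidden global state,
    # so the same steps on the same data always give the same answer.
    rng = random.Random(seed)
    means = []
    for _ in range(n_resamples):
        resample = [rng.choice(values) for _ in values]
        means.append(statistics.fmean(resample))
    means.sort()
    lower = means[int(0.025 * n_resamples)]
    upper = means[int(0.975 * n_resamples) - 1]
    return lower, upper


# Hypothetical measurements (e.g. lengths in mm), for illustration only.
lengths = [41.2, 38.5, 44.0, 39.9, 42.3, 40.1, 37.8, 43.6]

run_1 = bootstrap_mean_ci(lengths)
run_2 = bootstrap_mean_ci(lengths)
print(run_1 == run_2)  # True: same steps, same data, same seed
```

Running the same function on a different set of measurements would instead be a test of replicability. Changing `seed` may shift the interval slightly, which is why the seed is worth reporting with the result.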

Definitions

- Computational reproducibility: When detailed information is provided about code, software, hardware and implementation details.

- Empirical reproducibility: When detailed information is provided about non-computational empirical scientific experiments and observations. In practice, this is enabled by making the data and details of how it was collected freely available.

- Statistical reproducibility: When detailed information is provided, for example, about the choice of statistical tests, model parameters, and threshold values. This mostly relates to pre-registration of study design to prevent p-value hacking and other manipulations.

A problem with any one of these three types of reproducibility [...] can be enough to derail the process of establishing scientific facts. Each type calls for different remedies, from improving existing communication standards and reporting (empirical reproducibility) to making computational environments available for replication purposes (computational reproducibility) to the statistical assessment of repeated results for validation purposes (statistical reproducibility).

Stodden 2014 [Online; accessed 20 May 2021]
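Computational reproducibility depends on reporting the software and hardware an analysis ran on. The following is a minimal sketch that assumes a Python-based analysis. It uses only the standard library to record the interpreter version, operating system and the versions of a chosen list of packages in a file shipped with the results. The package names and the `environment.json` file name are placeholders.

```python
import json
import platform
from importlib import metadata


def describe_environment(packages):
    """Collect basic facts about the computational environment."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # not installed in this environment
    return {
        "python": platform.python_version(),
        "implementation": platform.python_implementation(),
        "os": platform.platform(),
        "machine": platform.machine(),
        "packages": versions,
    }


if __name__ == "__main__":
    # Placeholder package list: use whatever your analysis actually imports.
    env = describe_environment(["numpy", "pandas"])
    with open("environment.json", "w", encoding="utf-8") as fh:
        json.dump(env, fh, indent=2)
    print(json.dumps(env, indent=2))
```

Recording versions documents the environment but does not recreate it. Containers (e.g. Rocker images for R) go a step further by packaging the environment itself.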

Reproducibility is at the core of the scientific method

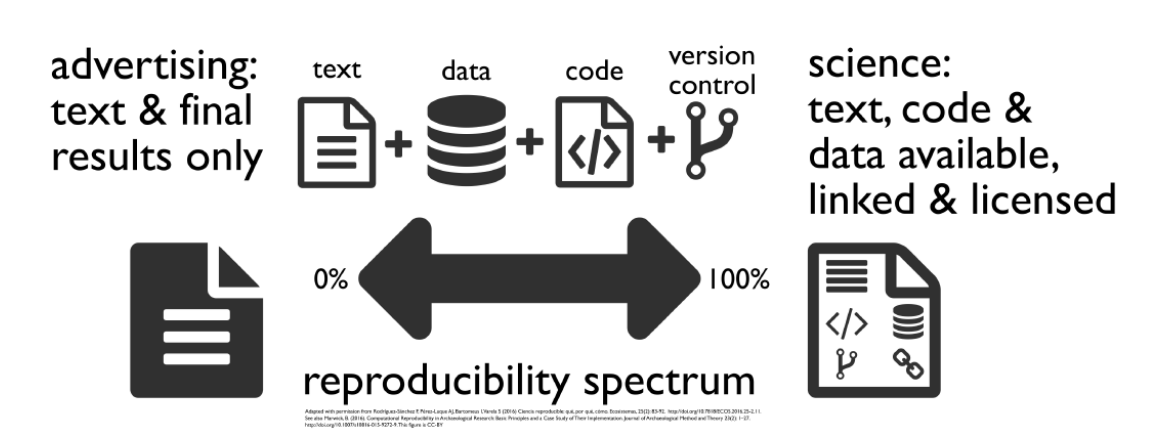

An article (about a computational result) is advertising, not scholarship. The actual scholarship is the full software environment, code and data that produced the result.

Buckheit & Donoho 1995

Reproducibility leads to increased rigour and quality

This image was created by Scriberia for The Turing Way community and is used under a CC-BY licence

Reproducibility advantages for individual researchers

-> F. Markowetz - 5 selfish reasons to work reproducibly [Online; accessed 20 May 2021]

Rationale

How computers broke science – and what we can do to fix it [Online; accessed 20 May 2021]

Reproducibility of scientific results in the EU

"Overall the report introduces the concept of reproducibility as a continuum of practices".

"It is posited that the reproducibility of results has value both as a mechanism to ensure good science based on truthful claims, and as a driver of further discovery and innovation." Reproducibility of scientific results in the EU

Reasons to Share

- Encouraging scientific advancement

- Being a good community member

- Potential to encourage others to work on the problem

- Encouraging sharing and having others share with you

- The potential to set a standard for the field

- Improvement in the caliber of research

- Increase in publicity, track metrics of impact

- Opportunity to get feedback on your work

- Potential for finding collaborators

- Normalizing understanding in a field

- To reproduce or to verify research

- To make the results of publicly funded research available to the public

- To enable others to ask new questions of extant data

- To advance the state of research and innovation

Reasons Not to Share

- The time it takes to clean up and document data for release

- The possibility that your data may be used without citation

- Legal barriers, such as copyright

- Time to verify privacy or other administrative data concerns

- The potential loss of future publications using these data

- Competitors may get an advantage

- Dealing with questions from users about the data

- Technical limitations, e.g., web platform space constraints

- Intense competition in the topic

- Investment of large amount of work building the dataset

- Insufficient perceived reward, such as promotion or subsequent citation

- Effort in documenting

- Concerns for priority, including control of results and sources

- Intellectual property issues

Promoting an open research culture

A likely culprit for this disconnect [embracing, but not practicing open science principles] is an academic reward system that does not sufficiently incentivize open practices.

Nosek et al. 2015

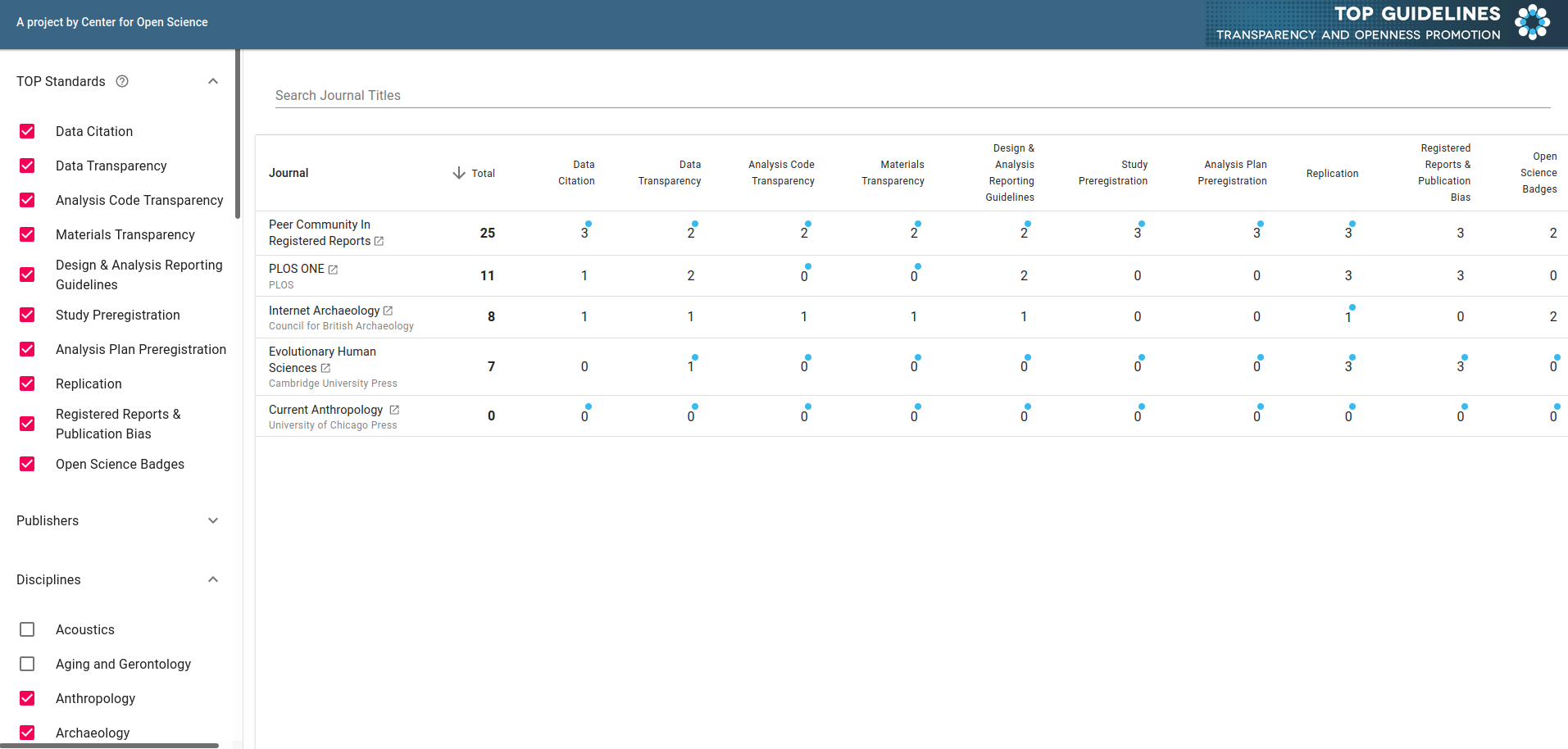

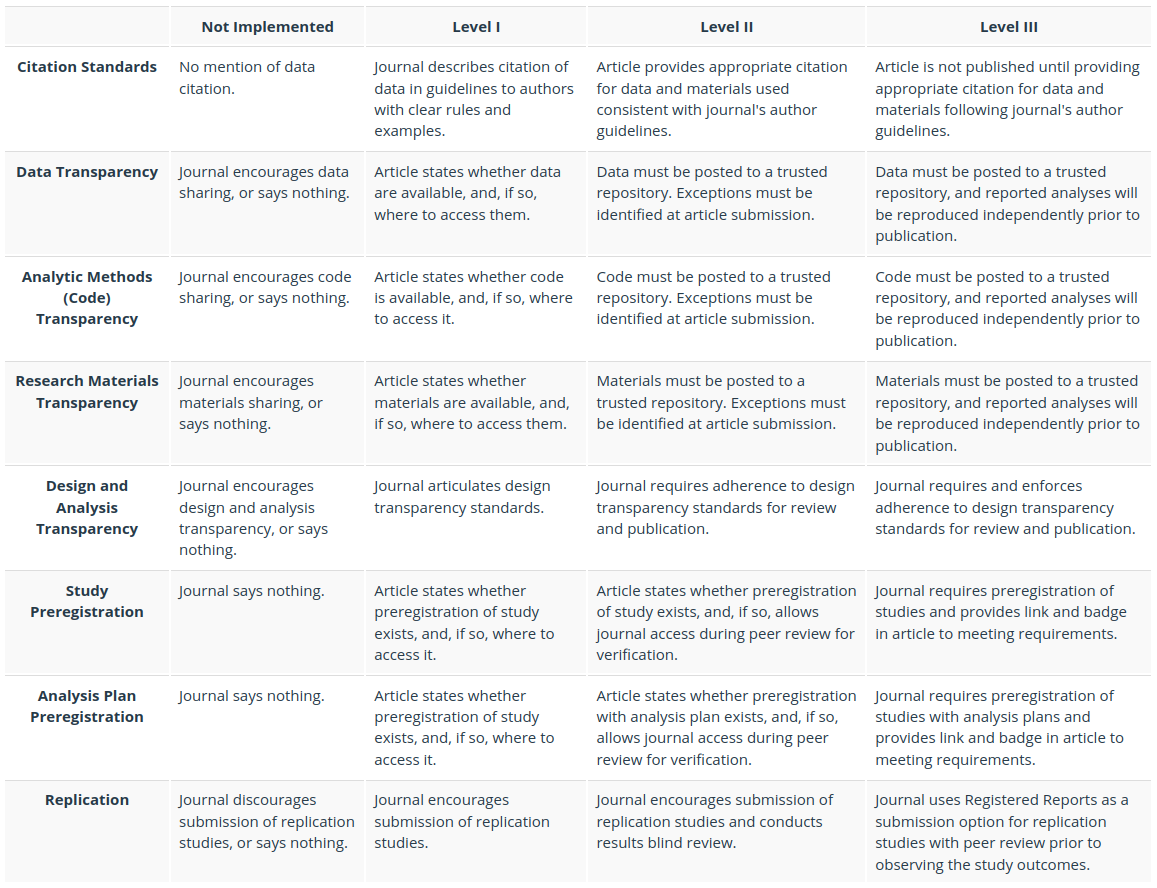

TOP Guidelines

The TOP Factor

"Journal policies can be evaluated based on the degree to which they comply with the TOP Guidelines. This TOP Factor is a metric that reports the steps that a journal is taking to implement open science practices, practices that are based on the core principles of the scientific community. It is an alternative way to assess journal qualities, and is an improvement over traditional metrics that measure mean citation rates. The TOP Factor is transparent [...] and will be responsive to community feedback." TOP Guidelines

Read more about the TOP Factor at https://topfactor.org.

Archaeology and Anthropology journals in the TOP archive

Promoting an open research culture

Reward system

This image was created by Scriberia for The Turing Way community and is used under a CC-BY licence

Promoting an open research culture

Education

This image was created by Scriberia for The Turing Way community and is used under a CC-BY licence

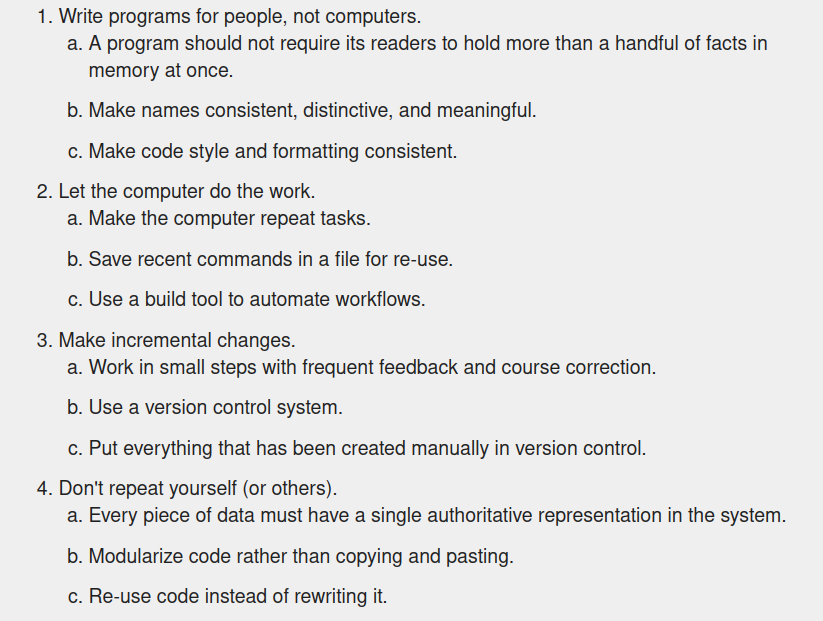

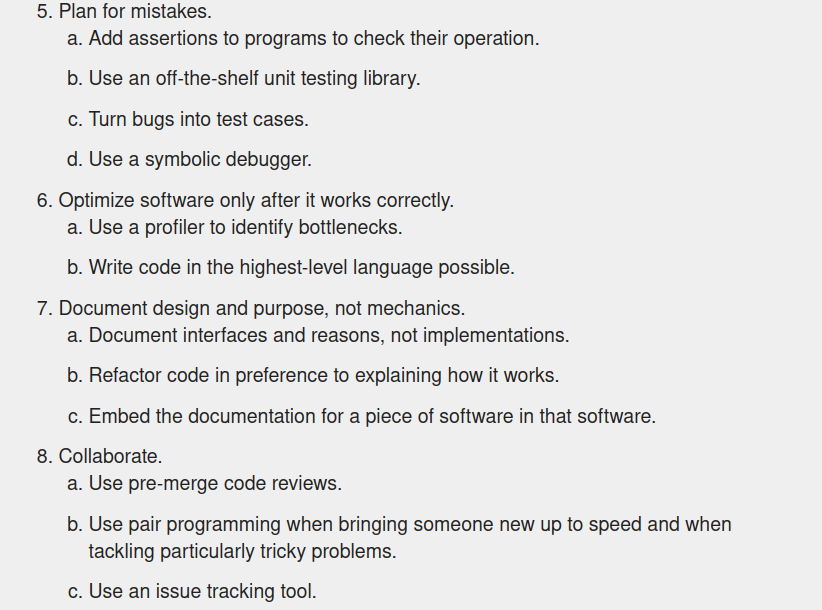

Best Practices for Scientific Computing (1 of 2)

Best Practices for Scientific Computing (2 of 2)

How do I make my research reproducible?

1. Plan for reproducibility before you start

- Project management (RStudio, OSF, GitHub)

2. Keep track of things

- Pre-registration (OSF)

- Version control system (Git & GitHub)

- Documentation (README, Codebook)

- Use software whose operation can be coded (scriptable rather than point-and-click)

- Literate programming & Dynamic documents (RMarkdown)

- Save raw data; don't save outputs (treat them as disposable and regenerate them from code)

- Save data in open formats (CSV)

3. Share and license your research

- Data repository (OSF, Zenodo, Figshare)

- Code/software repository (GitHub)

- Container (Rocker)

- Publish a research compendium

4. Report your research transparently

- Pre-print repository (OSF, PCI)

- Protocols (https://www.protocols.io/)
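Several of the practices above ("save raw data, don't save outputs", "save data in open formats", "use software whose operation can be coded") can be combined in a single script. The following is a minimal Python sketch. The file paths and the column names (`site`, `length_mm`) are hypothetical. The raw CSV is only ever read, and the derived summary is rebuilt from it on every run.

```python
import csv
import statistics
from pathlib import Path

RAW = Path("data/raw/measurements.csv")     # hypothetical raw data file
DERIVED = Path("data/derived/summary.csv")  # disposable, rebuilt on every run


def summarise(raw_path, out_path):
    """Read the raw CSV (never modified) and regenerate the summary CSV."""
    with raw_path.open(newline="", encoding="utf-8") as fh:
        rows = list(csv.DictReader(fh))
    groups = {}
    for row in rows:
        groups.setdefault(row["site"], []).append(float(row["length_mm"]))
    out_path.parent.mkdir(parents=True, exist_ok=True)
    with out_path.open("w", newline="", encoding="utf-8") as fh:
        writer = csv.writer(fh)
        writer.writerow(["site", "n", "mean_length_mm"])
        for site in sorted(groups):
            values = groups[site]
            writer.writerow([site, len(values), round(statistics.fmean(values), 2)])
    return groups


if __name__ == "__main__":
    # Create a tiny hypothetical raw file only if none exists, so the sketch runs.
    if not RAW.exists():
        RAW.parent.mkdir(parents=True, exist_ok=True)
        RAW.write_text("site,length_mm\nA,41.2\nA,38.5\nB,44.0\n", encoding="utf-8")
    summarise(RAW, DERIVED)
    print(DERIVED.read_text(encoding="utf-8"))
```

Because `data/derived` can always be regenerated, it can be deleted at any time. Only the raw data and the code need to be kept under version control and archived.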

What is a research compendium?

This image was created by Scriberia for The Turing Way community and is used under a CC-BY licence

- A research compendium is a collection of all digital parts of a research project including data, code, texts.

- A research compendium can be constructed with minimal technical knowledge (a basic folder structure combining all components can be sufficient).

- Publishing your research paper along with a research compendium allows others to access your input, test your analysis and, if the compendium can be executed, rerun it to assess the resulting output. This not only instills trust in your research but can also give you more visibility.
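As noted above, a basic folder structure can be enough to start a compendium. The following is a minimal Python sketch that creates one. The layout in `LAYOUT` is an assumption, one common way of separating raw data, derived data, code and text, not a fixed standard. A licence file is deliberately left out, since choosing a licence is a separate decision. R users may find the rrtools package useful for the same job.

```python
from pathlib import Path

# One possible layout (an assumption, not a fixed standard); adapt freely.
LAYOUT = {
    "data/raw": "Original data, treated as read-only.",
    "data/derived": "Outputs regenerated by the code; safe to delete.",
    "code": "Scripts that turn the raw data into results.",
    "paper": "Manuscript text, figures and tables.",
}


def write_once(path, text):
    """Write `text` to `path` unless the file already exists."""
    if not path.exists():
        path.write_text(text, encoding="utf-8")


def create_compendium(root, title):
    """Create a minimal research compendium skeleton under `root`."""
    root = Path(root)
    for folder, purpose in LAYOUT.items():
        (root / folder).mkdir(parents=True, exist_ok=True)
        write_once(root / folder / "README.md", purpose + "\n")
    lines = [f"# {title}", "", "Contents:", ""]
    lines += [f"- `{folder}/`: {purpose}" for folder, purpose in LAYOUT.items()]
    write_once(root / "README.md", "\n".join(lines) + "\n")
    # List every path under the root, relative to it, for a quick overview.
    return sorted(p.relative_to(root).as_posix() for p in root.rglob("*"))


if __name__ == "__main__":
    for item in create_compendium("my-compendium", "My research project"):
        print(item)
```

The function never overwrites existing README files, so it is safe to rerun on a compendium you have already started editing.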

Summary


- Definitions of reproducible, replicable, robust and generalisable studies

- Definitions of empirical, computational and statistical reproducibility

- Reproducibility at the core of the scientific method

- Issues with research reproducibility

- Ways to promote an open research culture

- How to make research reproducible

- What is a research compendium

FAQ*

Everything is in the paper; anyone can reproduce this from there!

This is one of the most common misconceptions. Even having an extremely detailed description of the methods and workflows employed to reach the final result will not be sufficient in most cases to reproduce it. This can be due to several aspects, including different computational environments, differences in the software versions, implicit biases that were not clearly stated, etc.

I don’t have the time to learn and establish a reproducible workflow.

A significant number of freely available online services can be combined to facilitate setting up an entire workflow. Spending the time and effort to put this together will both increase the scientific validity of the final results and minimize the time needed to rerun or extend the work in further studies.

Food for thought

The basic building blocks of archaeological knowledge are non-replicable observations, but this does not mean we are immune to the reproducibility crisis.

But archaeological research is not just a list of sites and artefacts. In order to extract understanding from our irreproducible corpus of material, we subject it to an extraordinary range of analytical methods, most of which are replicable. This is where archaeologists have been most active in promoting reproducibility, as part of a larger trend towards open science.

We can’t rerun the history that produced that material, or even the process through which we obtained it. So how can we obtain reproducible results from non-replicable observations?

J. Roe. 2016. Does archaeology have a reproducibility crisis? [Online; accessed 20 May 2021]

Food for thought

-> Consider how the concepts of empirical and computational reproducibility apply to Archaeology.

Further readings

- Merritt et al. 2019. Don't cry over spilled ink: Missing context prevents replication and creates the Rorschach effect in bone surface modification studies

- Domínguez-Rodrigo et al. 2017. Use and abuse of cut mark analyses: The Rorschach effect. Journal of Archaeological Science, 86, 14–23

References & further resources

Reading list

- Marwick, B. Computational Reproducibility in Archaeological Research: Basic Principles and a Case Study of Their Implementation. J Archaeol Method Theory 24, 424–450 (2017). https://doi.org/10.1007/s10816-015-9272-9

References

Further resources

- F. Markowetz - 5 selfish reasons to work reproducibly

- K. A. Baggerly - The Importance of Reproducible Research in High-Throughput Biology

- A Simple Explanation for the Replication Crisis in Science

- How computers broke science – and what we can do to fix it

- Does archaeology have a reproducibility crisis?

Practical exercises


Outline

- Create a research compendium

- Publish a research compendium (Zenodo / OSF)
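Before uploading a compendium to Zenodo or OSF, it can help to record a checksum for every file. Anyone who downloads the archive can then check that it is complete and unaltered. The following is a minimal Python sketch. The manifest name `MANIFEST.sha256` and the folder name `my-compendium` are hypothetical.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=65536):
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_manifest(root, manifest_name="MANIFEST.sha256"):
    """List every file under `root` with its checksum (manifest excluded)."""
    root = Path(root)
    lines = []
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.name != manifest_name:
            rel = path.relative_to(root).as_posix()
            lines.append(f"{sha256_of(path)}  {rel}")
    (root / manifest_name).write_text("\n".join(lines) + "\n", encoding="utf-8")
    return lines


if __name__ == "__main__":
    folder = Path("my-compendium")  # hypothetical compendium folder
    if folder.is_dir():
        for line in write_manifest(folder):
            print(line)
```

The two-space `checksum  path` format matches the output of GNU `sha256sum`. On systems that have that tool, running `sha256sum -c MANIFEST.sha256` from inside the folder should verify the files.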